Topic: APA School Curriculum Standardization

blalock

Member

posted 07-16-2009 02:08 PM

Upon reviewing our state's (FL) "Principles and Standards of Practice," I came across the following paragraph: "27. No examiner shall conduct an examination utilizing a specific issue polygraph technique, which has not been validated by an existing body of acceptable scientific studies." An impressive standard, until the other shoe dropped: "A polygraph technique, which has been taught in an accredited polygraph school, shall be presumed to be a validated testing technique." Now, as long as all "accredited" polygraph schools teach only validated testing techniques, we are fine. However, if for some reason they are teaching some unvalidated testing techniques, then look out... ------------------

Ben blalockben@hotmail.com

detector

Administrator

posted 07-16-2009 10:52 PM

Ahh, the discombobulated world of polygraph.

rnelson

Member

posted 07-17-2009 11:34 AM

It is important to remember exactly what we mean when we have these conversations about validity.

What does it mean to say that something is valid? What types of things can be valid? Notice the word "be," referring to a state of existence, which implies a material property. All of this becomes inaccurately implicit in our language and conceptual understanding if we are not careful and thoughtful.

Say someone comes up with a new and fancy hypothesis. It might be valid. It might also be invalid. The validity is unknown, but it is what it is. Our job is to learn about the validity of the new and fancy idea.

Validity itself is not a thing or material substance. Things or ideas are not valid, in the sense that we are not trying to say that this is that in any physical or material sense. Validity, in science, is an amorphous and intangible property or quality of an idea or hypothesis. It is possible that all ideas are valid, and all ideas are invalid, to some degree, more or less. Our job, as scientists, is to collect data and conduct measurement and mathematically based experiments that become evidence in support of or against the validity of an idea or hypothesis.

In a concrete sense we might say that an experiment or study validates an idea (technique, scenario, scoring method, etc.). It would be more correct, though, to state more cautiously that a study or experiment provides support for the validity of the idea, or does not support (refutes) the validity of the idea.

Fancy ideas (hypotheses) can also be refuted on their face validity, if the logic or conceptual idea does not fit together parsimoniously with existing knowledge. Don't waste time and money trying to validate ideas that don't fit together with what we already know.

Sometimes, when we get into the weeds, we encounter an unexpected experimental result that challenges or goes against what we thought we already knew, even though we had previous experimental evidence that supported the validity of what we thought we knew. It happens. For centuries, since Newton and Galileo, people were proving and disproving ideas, and it all seemed to fit together in a parsimonious and consistent theoretical framework that we call "physics." Then, as they got deeper into the weeds, they found odd things at the sub-atomic level that don't seem to follow the existing laws of physics. Do they throw out the old or the new ideas? Neither. The smart thing to do is to recognize that we need to broaden our conceptual map so that we can develop a new and integrated understanding of the old and new ideas together: a new theoretical framework that begins to parsimoniously integrate Newtonian physics with sub-atomic phenomena. Hmm. This has happened over and over. We should learn from it.

A previous example of this same issue was the black-swan problem. For a time everyone assumed that all swans were white, because all the evidence up to a certain time suggested that to be the case. The evidence supported the validity of the idea. But then someone found some black swans somewhere. Were they wrong the whole time, in assuming that all swans are white? To the degree that people made concrete assertions about the validity of the idea that all swans are white, they were wrong all along. To the degree that they made responsible scientific judgments about what the evidence had told them so far (the data seem to support the validity of the hypothesis that all swans are white), they were correct, even though black swans existed somewhere.

Validity is not a thing, and it is not a simple matter of declaration. It is an intangible property that is inferred through available evidence from credible and repeatable scientific studies. The more studies, the more evidence, and the more replication, the smarter we are. We also know from experience not to be surprised when we eventually begin to encounter paradoxical findings. It simply means that we don't know everything yet, we never will, and that we have to broaden our thinking once again.

.02

r

------------------

"Gentlemen, you can't fight in here. This is the war room."

--(Stanley Kubrick/Peter Sellers - Dr. Strangelove, 1964)


lietestec

Moderator

posted 07-27-2009 05:18 PM

To add to Ray's post concerning validity: remember that many polygraph schools are still teaching the Army MGQT (we quit teaching it 3 years ago), which would fall under the "validated" umbrella; however, it is only validated at 25% on NDI examinees, with an overall "validity" of about 61%, not much better than voice stress. Voice stress has been validated as well, at less than 50%, but it is "validated" nonetheless.

If you take a validated format, e.g., the Utah Single-Issue, and need to add an irrelevant question because of an artifact or a desire to establish a tonic level before the next question presentation, then, technically, what you did is different from the validation studies that established it as being 91% valid, and there are those purists who would say your test, as run, is invalid.

ASTM and APA had the same type of statement at one time as to the use of validated techniques or formats; however, they gave no reference as to what formats or techniques were actually "valid." When Don Krapohl did a search for APA to find how many different polygraph techniques or formats were actually being used by examiners, he stopped counting at 190+. I'm sure that a lot of those examiners considered that what they were using was "valid" because, after all, the technique or format "worked," whatever that means: the examinee didn't die, the instrument didn't explode, etc.

As many of our detractors have stated over the years, we use a lot of important-sounding jargon in this field, and, until recently, we have had little to back up what we say.

Buster

Member

posted 07-27-2009 07:15 PM

------------------------------------------

If you take a validated format, e.g., the Utah Single-Issue, and need to add an irrelevant question because of an artifact or a desire to establish a tonic level before the next question presentation, then, technically, what you did is different from the validation studies that established it as being 91% valid, and there are those purists who would say your test, as run, is invalid.

--------------------Quote-------------------

I had a DACA instructor in a seminar a couple of years ago who said this. He said if you add an irrelevant to a Utah you are now doing a "Buster," not a Utah. I am an example, I guess, as I am using Nate's Zone, which is not validated. But you have to understand Nate's frustration, as his technique was not listed, but an MGQT was listed with a published accuracy rate of 68%. I think Nate was working with Mr. Krapohl to get him to list the IZCT as validated. [This message has been edited by Buster (edited 07-27-2009).]

Barry C

Member

posted 07-27-2009 09:00 PM

Thus the reason we need to get away from the errant "purist" view. The Utah researchers claim (and I agree) they have validated the CQT: any CQT that consists of CQs and RQs that were properly developed in a good pre-test, which makes for a loaded sentence.

Adding an extra neutral won't invalidate the test unless you really don't understand what's going on to begin with. The Utah position, and one we need to adopt, is that the CQT has been so well tested that we know it works, and it is robust enough to withstand reasonable modifications such as an extra neutral. (In fact, they suggest extras if you have a really big cardio response, for example, that just keeps going.)

Think of it this way: Science has validated the concept that we understand as gravity. We know from experience that if we step off our top stair and don't get it right, we'll find ourselves on the ground. We know that what goes up must come down, in essence. I've never stepped off my roof, but I'm quite sure that gravity would work the same there, and I'm not going to test it. It's not just limited to our front stairs or diving boards, etc. We know enough about it to know it works. The "purists," however, would have us believe that the conclusion that gravity pulls us down from our roofs doesn't pass muster. It sounds crazy there, doesn't it? It is, and it's just as crazy to think polygraph is invalid if a minor change is made.

That's why, for example, the Matte test works. No, his inside / outside track doesn't do anything (which his own data shows, but the proponents ignore and fail to analyze). However, that do-nothing extra "track" doesn't invalidate the Matte test in the sense that it isn't an adequate CQT. (It's just extra work that doesn't pay any dividends, at least with its best users.)

Now I don't suggest you go out and make up your own test. There are lots of rules, and not everybody knows what they are. Order effects, for example, could influence your test, and if you don't know what that means, you might weaken an otherwise good CQT.

rnelson

Member

posted 07-27-2009 11:06 PM

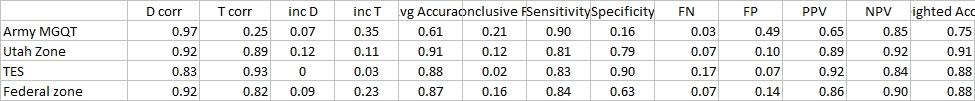

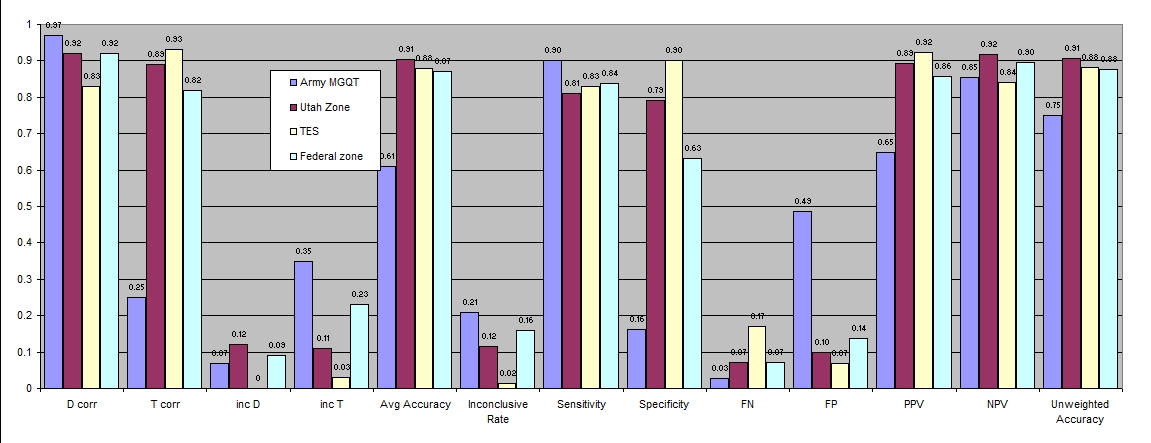

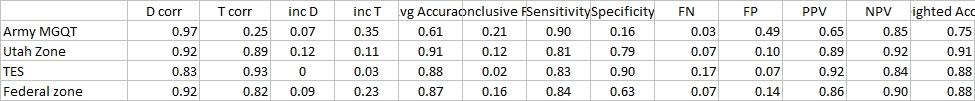

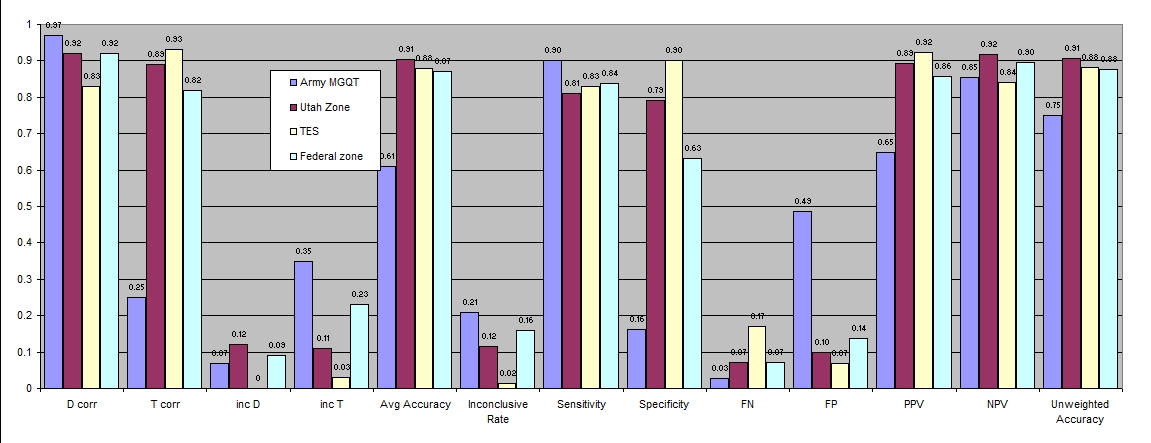

Like many, I'm not a big proponent of the Army test for most purposes. However, I've been saying for a while that the .61 figure doesn't seem to give a correct impression of the technique. We know it has high sensitivity and weaker specificity, but there is more to the story. It may not be as bad as it has been reported.

So, out of fairness to the venerable Army MGQT (you-fail technique)...

Using the data reported by Krapohl (2006), I can reproduce his average accuracy rate for the Army MGQT (.61) by the simple average of the % correct for deceptive cases and the % correct for truthful cases. However, this may not be the correct way to calculate the unweighted mean accuracy.

Look at the table below. The four sets of data bars to the left are the data reported by Krapohl (2006). The fifth set of data bars is my replication of the simple average accuracy reported by Krapohl, and the sixth set is the average INC rate. The next several sets of data bars are my calculations of common accuracy metrics. The last set of data bars is my calculation of mean unweighted accuracy, using the average of PPV and NPV (not the average of % correct).

What you'll notice is that most of the numbers are very close for the simple average %-correct method and the unweighted mean of PPV and NPV method. The Army MGQT is the technique for which the two methods produce substantially different results. Why? Because the INC and error rates for truthful cases are much higher for the Army MGQT than for the other techniques. Why this is the case is another matter, and has to do with the number of issues and the all-or-any spot scoring rules, but that's a longer discussion.

I think it's possible that the reported numbers were calculated using a simple method that is not ideal. It is easy to do this, because for most circumstances the difference is negligible and unlikely to be noticed.

The point is that the mean unweighted accuracy can be estimated as .75, which, if we did the math with a reasonable sample size, would be significantly greater than chance. People who have used the Army may have their own sense about whether .61 or .75 seems to more closely reflect their experience.

Look more closely at the data and you'll see that although the sensitivity to deception is .97, PPV is lower, meaning that an expected portion of deceptive results will be incorrect. Even though the specificity rate is low, the low false-negative rate means that NPV (the probability that a truthful result is correct) is nice and high (.89).

Why, you ask, do we make this so complicated? Because the average unweighted accuracy is more resistant to base-rates than simple % correct rates, meaning it is easier to imagine or generalize how accuracy will be observed in field settings with unknown base-rates.

All of these values can be calculated rather easily from the data reported in Krapohl (2006).
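For anyone who wants to try the arithmetic, here is a minimal Python sketch of the two calculations being compared. The counts are made up for illustration and are not the Krapohl (2006) figures; the names `GroupCounts` and `accuracy_summary` are hypothetical, and the sketch assumes that "% correct" counts inconclusives as not correct while PPV and NPV are computed only from decisions actually made.

```python
from dataclasses import dataclass


@dataclass
class GroupCounts:
    """Results for one ground-truth group (hypothetical numbers only)."""
    correct: int
    errors: int
    inconclusive: int

    @property
    def total(self) -> int:
        return self.correct + self.errors + self.inconclusive


def accuracy_summary(deceptive: GroupCounts, truthful: GroupCounts) -> dict:
    # Percent correct within each group; inconclusives count as not correct.
    sensitivity = deceptive.correct / deceptive.total
    specificity = truthful.correct / truthful.total
    # Predictive values use only the decisions made, so inconclusives drop out.
    ppv = deceptive.correct / (deceptive.correct + truthful.errors)
    npv = truthful.correct / (truthful.correct + deceptive.errors)
    inc_rate = (deceptive.inconclusive / deceptive.total
                + truthful.inconclusive / truthful.total) / 2
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "simple_average": (sensitivity + specificity) / 2,
        "inc_rate": inc_rate,
        "ppv": ppv,
        "npv": npv,
        "mean_ppv_npv": (ppv + npv) / 2,
    }


# Made-up counts, NOT the Krapohl (2006) data: 100 cases per group.
summary = accuracy_summary(
    deceptive=GroupCounts(correct=90, errors=8, inconclusive=2),
    truthful=GroupCounts(correct=32, errors=45, inconclusive=23),
)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

With these invented counts the simple average of % correct comes out lower than the mean of PPV and NPV, because the truthful group carries most of the errors and inconclusives. Swapping in the counts from the published table would be the way to check the .61 and .75 figures.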

Bottom line: it may not be as bad as it seems. .02 r

------------------

"Gentlemen, you can't fight in here. This is the war room."

--(Stanley Kubrick/Peter Sellers - Dr. Strangelove, 1964)

[This message has been edited by rnelson (edited 07-27-2009).]

rnelson

Member

posted 07-28-2009 06:05 AM

Interpretation of the above WONK.

Army MGQT estimates:

Overall test accuracy: ~.75

Proportion of truthful persons that pass: ~.16

The reasons that number is so low have to do with the test structure and the math. Any INC question, and any error, and it's game-over for the truthful guy. More questions = more problems. That is, as long as we continue to limit polygraph scoring to math that we learned in the second grade (simple addition of positive and negative numbers). There are solutions to this limitation, but we'll have to be willing to grow up and learn them.

Here is the biggest concern.

Proportion of truthful persons that don't pass: ~.49

Not that great. However,

Proportion of truthful decisions that are correct: ~.85

is not that bad.

Here is the good part,

Proportion of deceptive persons that pass (FNs): ~.03

And,

Proportion of deceptive persons caught: ~.9

Proportion of deceptive decisions that are correct: ~.65

r

------------------
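The "more questions = more problems" point can be sketched in a few lines of Python. This is only an illustration under a strong assumption that is not in the post above: each relevant question is scored independently, and a truthful examinee clears each one with the same probability. Questions within a real exam are not independent, so treat the numbers as a picture of the trend rather than an estimate. The function name `pass_probability` and the 0.8 per-question figure are hypothetical.

```python
def pass_probability(per_question_pass: float, n_questions: int) -> float:
    """Chance a truthful examinee clears every question when any single
    failed or inconclusive question sinks the whole test.

    Assumes independent questions with equal per-question pass rates.
    """
    return per_question_pass ** n_questions


# Hypothetical per-question pass rate of 0.8 for a truthful examinee.
for k in range(1, 5):
    print(f"{k} question(s): {pass_probability(0.8, k):.3f}")
```

Under that assumption, the overall pass rate shrinks with every added question even though the per-question rate never changes, which is the structural problem described above for any-spot decision rules.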

"Gentlemen, you can't fight in here. This is the war room."

--(Stanley Kubrick/Peter Sellers - Dr. Strangelove, 1964)


Bill2E

Member

posted 07-28-2009 08:17 AM

"Here's the good part"? Looks rather dismal to me all around. I'm really looking at going to the DLC personally, and the instructions I have received on the administration of the DLC are good. Just have to attend a training session and then try it out, do some math, and implement it with my agency.

Polygraph Place Bulletin Board

Professional Issues - Private Forum for Examiners ONLY